Infrastructure as Code (IaC) here means cloud infrastructure, not infrastructure code in the sense of writing your own middleware library.

Is it a misnomer?

In most cases IaC refers to some kind of template-based solution.

In this article, "template" refers to an AWS CloudFormation template.

It is strongly debatable if templates are code. If anything, code inside templates is generally an anti-pattern.

Think of templates as an “intermediary language” rather than code.

Templates are executed by the respective platform “engines”, which drive the actual cloud infrastructure configuration management.

Consider it “code” only in the sense that it is an asset managed in version control together with the rest of the source code.

Templates

Templates will be sufficient for most Infrastructure as Code (IaC) jobs. Most templating languages have constructs to express logic, for example conditions. Most also allow inline code as part of the configuration; nonetheless, that is code mixed with template, for example the AWS Velocity template for DynamoDB.
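To illustrate what a condition looks like inside a template, here is a minimal sketch that builds a small CloudFormation template as a Python dictionary and prints it as JSON. The parameter name `Env`, the condition name `IsProd`, and the resource name `LogBucket` are made up for this example.

```python
import json

# A minimal CloudFormation template expressed as a Python dict.
# "Env", "IsProd" and "LogBucket" are hypothetical names for illustration.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "Env": {"Type": "String", "AllowedValues": ["dev", "prod"], "Default": "dev"},
    },
    "Conditions": {
        # Evaluates to true only when the stack is deployed with Env=prod
        "IsProd": {"Fn::Equals": [{"Ref": "Env"}, "prod"]},
    },
    "Resources": {
        "LogBucket": {
            "Type": "AWS::S3::Bucket",
            # The bucket is created only when the condition holds
            "Condition": "IsProd",
        },
    },
}

template_json = json.dumps(template, indent=2)
print(template_json)
```

The logic lives entirely in the `Conditions` section and is evaluated by CloudFormation at deployment time, not by Python.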

Writing Infrastructure as Code templates this way – in YAML or JSON – has been reasonable, but not without concerns. A good article on the topic is In defense of YAML.

Templates for templates

AWS SAM is a good example of simplifying CloudFormation templates. Notice the Transform: 'AWS::Serverless-2016-10-31' element in SAM templates. A single SAM element may transform into a list of CloudFormation elements, deploying a series of resources as a result.
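As a rough illustration, the sketch below writes a small SAM template as a Python dictionary. The single `AWS::Serverless::Function` element, with its API event, is the kind of element that SAM typically expands into several CloudFormation resources (a Lambda function, an IAM role, API Gateway resources). The resource name `HelloFunction`, the handler, the runtime, and the path are assumptions for this example.

```python
import json

# A minimal SAM template as a Python dict.
# "HelloFunction", "app.handler", "./src" and "/hello" are hypothetical values.
sam_template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    # This line tells CloudFormation to run the SAM transform
    "Transform": "AWS::Serverless-2016-10-31",
    "Resources": {
        "HelloFunction": {
            "Type": "AWS::Serverless::Function",
            "Properties": {
                "Handler": "app.handler",
                "Runtime": "python3.12",
                "CodeUri": "./src",
                "Events": {
                    # One event entry is enough for SAM to add an API in front
                    "HelloApi": {
                        "Type": "Api",
                        "Properties": {"Path": "/hello", "Method": "get"},
                    }
                },
            },
        }
    },
}

sam_json = json.dumps(sam_template, indent=2)
print(sam_json)
```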

Another example is serverless.com. Their templating language supports multiple platforms while also simplifying the templating compared to CloudFormation.

One of my concerns, however, is the mixing of Infrastructure as Code with Function as a Service definitions. For example, the definition of a function AWS::Serverless::Function sits in the same place as an API Gateway API AWS::Serverless::Api and the related usage plan AWS::ApiGateway::UsagePlan.

I would like to keep application and infrastructure concerns separate.

My immediate approach was going to be to split up the template into multiple files and use AWS::Include to bring them back together. However, AWS::Include does not work for all parts of the schema. Trying to use AWS::Include under Resources to include a set of functions simply does not work.

The next approach was going to be nested stacks. It is the recommended approach for large stacks anyway, so it seemed like a winner. It turns out nested stacks are great for reusable templates – see Use Nested Stacks to Create Reusable Templates and Support Role Specialization – but not so much for decomposing an application (template).

Using actual code for infrastructure

The AWS API gives access to the platform and resources through a range of SDKs (the Python SDK is called boto3). It is truly low-level access to resources, typically used for developing software on the platform.

Infrastructure could be managed using the SDK. There are many good automation examples on how AWS Lambda can react to events from the infrastructure and respond with changes to it.

Managing more than a few resources using the SDK is not feasible, considering the coordination it would require: dependencies, delay in resource setup.
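To give a sense of the coordination involved, the sketch below only works out a valid creation order for a handful of resources. It makes no AWS calls, and the resource names and their dependencies are invented for illustration. A script built on the SDK would additionally have to wait for each resource to become ready and deal with failures part-way through.

```python
from graphlib import TopologicalSorter

# Hypothetical resources mapped to the resources they depend on.
dependencies = {
    "vpc": set(),
    "subnet": {"vpc"},
    "security_group": {"vpc"},
    "database": {"subnet", "security_group"},
    "function": {"database"},
}


def creation_order(deps):
    """Return an order in which every resource comes after its dependencies."""
    return list(TopologicalSorter(deps).static_order())


order = creation_order(dependencies)
print(order)
```

CloudFormation works out this ordering from the template, which is one reason templates scale better than hand-written SDK scripts.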

There is a better approach: code

The troposphere library allows for easier creation of the AWS CloudFormation JSON by writing Python code to describe the AWS resources.

The GitHub project has many good examples.

Using troposphere for Infrastructure as Code

The examples would lead you to believe that using troposphere is not much different from writing a CloudFormation or SAM template in Python. Depending on your use case, however, there are opportunities to explore:

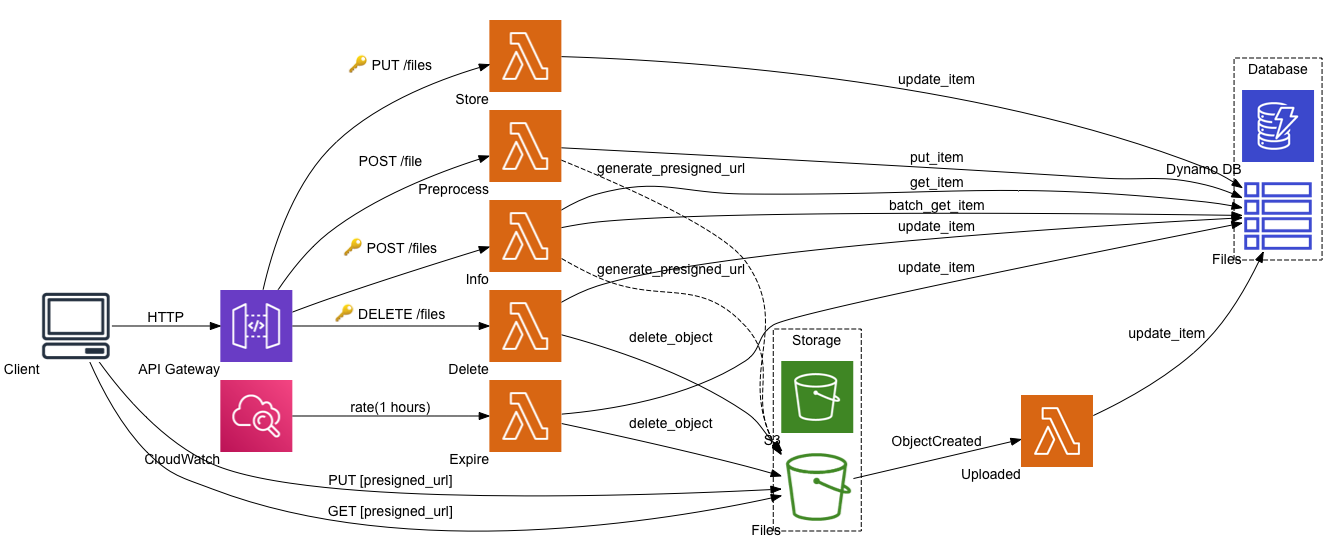

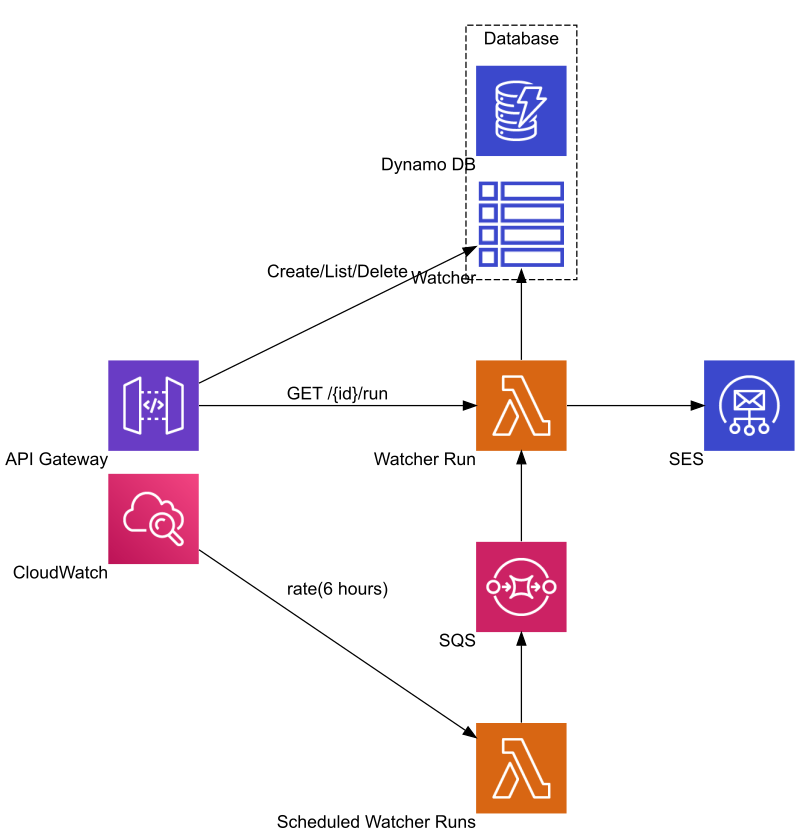

- Function as a Service (FaaS) implementations are heavily influenced by deployment. It would make sense if the deployment details were close to the code, ideally right next to it.

- In a similar fashion, a database resource definition (for example, DynamoDB) could be close to the ORM or data access implementation.

- Make infrastructure and deployment decisions in code. There are, of course, conditions in CloudFormation, and CloudFormation macros for more complex processing.

Having the infrastructure and deployment configuration close to the implementation code has its own pros and cons.
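The third point, making deployment decisions in code, might look like the following sketch. It uses plain dictionaries rather than troposphere so that it runs without extra dependencies. The function name `build_resources`, the table name `OrdersTable`, and the environment names are assumptions for this example.

```python
import json


def build_resources(env):
    """Return CloudFormation resources for the given environment name."""
    table = {
        "Type": "AWS::DynamoDB::Table",
        "Properties": {
            "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "S"}],
            "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],
            "BillingMode": "PAY_PER_REQUEST",
        },
    }
    if env == "prod":
        # Ordinary Python branching: only production keeps the table
        # when the stack is deleted
        table["DeletionPolicy"] = "Retain"
    return {"OrdersTable": table}


for env in ("dev", "prod"):
    print(env, json.dumps(build_resources(env)["OrdersTable"].get("DeletionPolicy")))
```

The same decision could be expressed with a CloudFormation condition. The difference is that here it is made with regular language constructs before the template is generated.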

Development time requirements

Ideally troposphere would only be used at development time (not at runtime, if we can avoid it), so the deployment package would not include this library.

# Development requirements, not for Lambda runtime

# pip install -r requirements-dev.txt

awacs==0.9.0

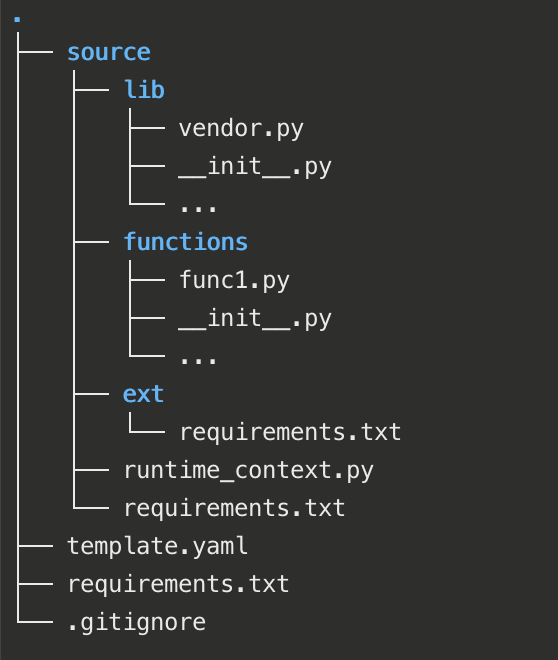

troposphere==2.4.6I use template.py in the root of the project, the same place where the template.yaml (or .json) would be, for producing the CloudFormation template.

I have followed two patterns for defining platform resources in the source code.

Class type resource definition

The resource definitions are part of the class implementation.

Note that the troposphere library is only imported at the time of getting the resources, and it is only used when generating the CloudFormation template. None of this needs to run in the Lambda runtime.

    # the application component
    class ApplicationComponent(...):
        # class attributes and methods
        # ...

        @classmethod
        def cf_resources(cls):
            from troposphere import serverless

            # return an array of resources associated with the application component
            return [...]


    # the template generator
    from troposphere import Template

    t = Template()
    t.set_transform('AWS::Serverless-2016-10-31')
    for r in ApplicationComponent.cf_resources():
        t.add_resource(r)
    print(t.to_yaml())

Decorator type resource definition

I use decorators for Lambda function implementations. The decorator attaches a function to the handler that returns the array of associated resources.

    # the wrapper
    def cf_function(**func_args):
        def wrap(f):
            def wrapped_f(*args, **kwargs):
                return f(*args, **kwargs)

            def cf_resources():
                from troposphere import serverless

                # use relevant arguments from func_args
                # (named "function" so it does not shadow the wrapped handler f)
                function = serverless.Function(...)  # include all necessary parameters
                # add any other resources
                return [function]

            wrapped_f.cf_resources = cf_resources
            return wrapped_f
        return wrap


    # the lambda function definition
    @cf_function(...)  # add any arguments
    def lambda1(event, context):
        # implementation
        return {
            'statusCode': 200
        }


    # the template generator
    from troposphere import Template

    t = Template()
    t.set_transform('AWS::Serverless-2016-10-31')
    for r in lambda1.cf_resources():
        t.add_resource(r)
    print(t.to_yaml())
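For readers who want to try the decorator pattern without installing troposphere, here is a self-contained sketch of the same idea. It is not the troposphere API: a plain dictionary stands in for `serverless.Function`, and the `logical_id` argument, the property values, and the handler path `app.lambda1` are assumptions made for this example.

```python
import functools
import json


def cf_function(logical_id, **properties):
    """Attach a cf_resources() function to a Lambda handler.

    logical_id and properties are hypothetical arguments for this sketch.
    """
    def wrap(f):
        @functools.wraps(f)  # keep the handler's name and docstring
        def wrapped_f(*args, **kwargs):
            return f(*args, **kwargs)

        def cf_resources():
            # A plain dict stands in for troposphere's serverless.Function
            return {
                logical_id: {
                    "Type": "AWS::Serverless::Function",
                    "Properties": dict(properties),
                }
            }

        wrapped_f.cf_resources = cf_resources
        return wrapped_f
    return wrap


@cf_function("Lambda1", Handler="app.lambda1", Runtime="python3.12", CodeUri=".")
def lambda1(event, context):
    return {"statusCode": 200}


# The template generator: collect resources from the decorated handler
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Transform": "AWS::Serverless-2016-10-31",
    "Resources": lambda1.cf_resources(),
}
print(json.dumps(template, indent=2))
```

The handler still behaves as an ordinary function at runtime; the resource definition is only evaluated when `cf_resources()` is called by the template generator.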

You could implement Lambda handlers in classes by wrapping them, as Ben Kehoe shows in his [Gist](https://gist.github.com/benkehoe/efb75a793f11d071b36fed155f017c8f).

Conclusion

AWS recently released the alpha version of their Cloud Development Kit (CDK). I have not tried it yet, but the Python CDK looks super similar to troposphere. Of course, they both represent the same CloudFormation resource definition in Python.

Whether you choose troposphere or AWS CDK, both bring a set of new use cases for managing cloud infrastructure and let us truly define Infrastructure as Code.

I will explore the use of troposphere on my next project.